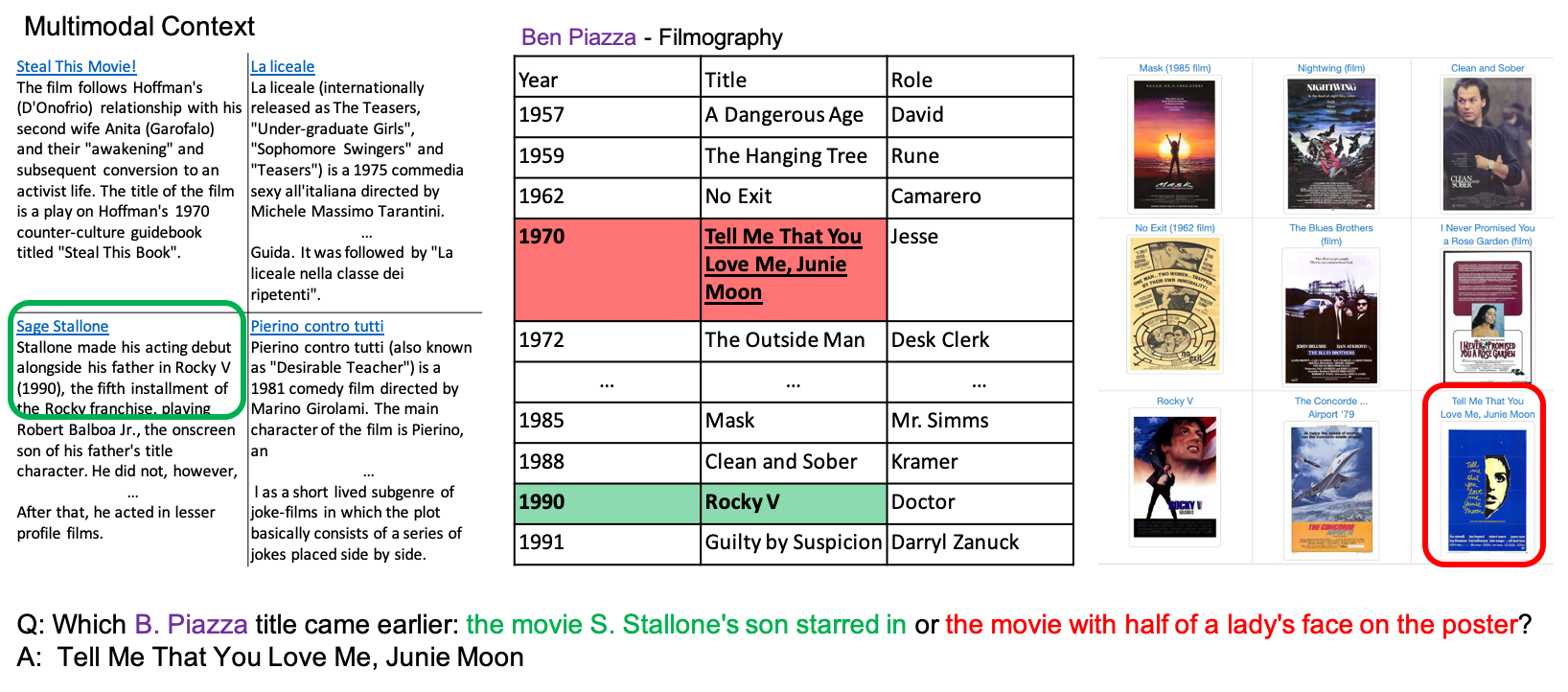

Cross-modal questions over tables, text and images

MultiModalQA is a challenging question-answering dataset of 29,918 examples that require joint reasoning over text, tables and images.

This dataset was created by a team of NLP researchers at Tel Aviv University, the University of Washington and the Allen Institute for AI.

For more details, check out our ICLR 2021 paper “MultiModalQA: Complex Question Answering over Text, Tables and Images”.

Paper

MultiModalQA: Complex Question Answering over Text, Tables and Images

Alon Talmor, Ori Yoran, Amnon Catav, Dan Lahav, Yizhong Wang, Akari Asai, Gabriel Ilharco, Hannaneh Hajishirzi, Jonathan Berant

ICLR 2021

@inproceedings{talmor2021multimodalqa,
  title={MultiModal{QA}: complex question answering over text, tables and images},
  author={Alon Talmor and Ori Yoran and Amnon Catav and Dan Lahav and Yizhong Wang and Akari Asai and Gabriel Ilharco and Hannaneh Hajishirzi and Jonathan Berant},
  booktitle={International Conference on Learning Representations},
  year={2021},
  url={https://openreview.net/forum?id=ee6W5UgQLa}
}

Download

- For the full documentation of the dataset and its format, please refer to our GitHub repository.

- Click here to see the MultiModalQA dataset.
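The exact file layout is documented in the GitHub repository and is not described on this page. As a minimal sketch, assuming the downloaded files are JSON Lines (one JSON object per line, possibly gzip-compressed), which is an assumption and not something stated here, a generic reader could look like this. The function name `iter_jsonl` is hypothetical, and no particular field names are assumed.

```python
import gzip
import json
from pathlib import Path
from typing import Iterator, Union


def iter_jsonl(path: Union[str, Path]) -> Iterator[dict]:
    """Yield one JSON object per non-empty line of a (possibly .gz) JSON Lines file.

    Hypothetical helper: assumes the dataset files are JSON Lines, which
    should be checked against the format documentation in the repository.
    """
    path = Path(path)
    # Pick the opener from the file extension; both accept text mode "rt".
    opener = gzip.open if path.suffix == ".gz" else open
    with opener(path, "rt", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines rather than failing on them
                yield json.loads(line)
```

Usage: `for example in iter_jsonl("path/to/split.jsonl.gz"): ...`, where the path is a placeholder for whichever file you downloaded. Using a generator keeps memory flat even for large splits, since only one example is decoded at a time.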