Structured Set Matching Networks for One-Shot Part Labeling

Jonghyun Choi, Jayant Krishnamurthy, Aniruddha Kembhavi, Ali Farhadi

IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2018

Abstract

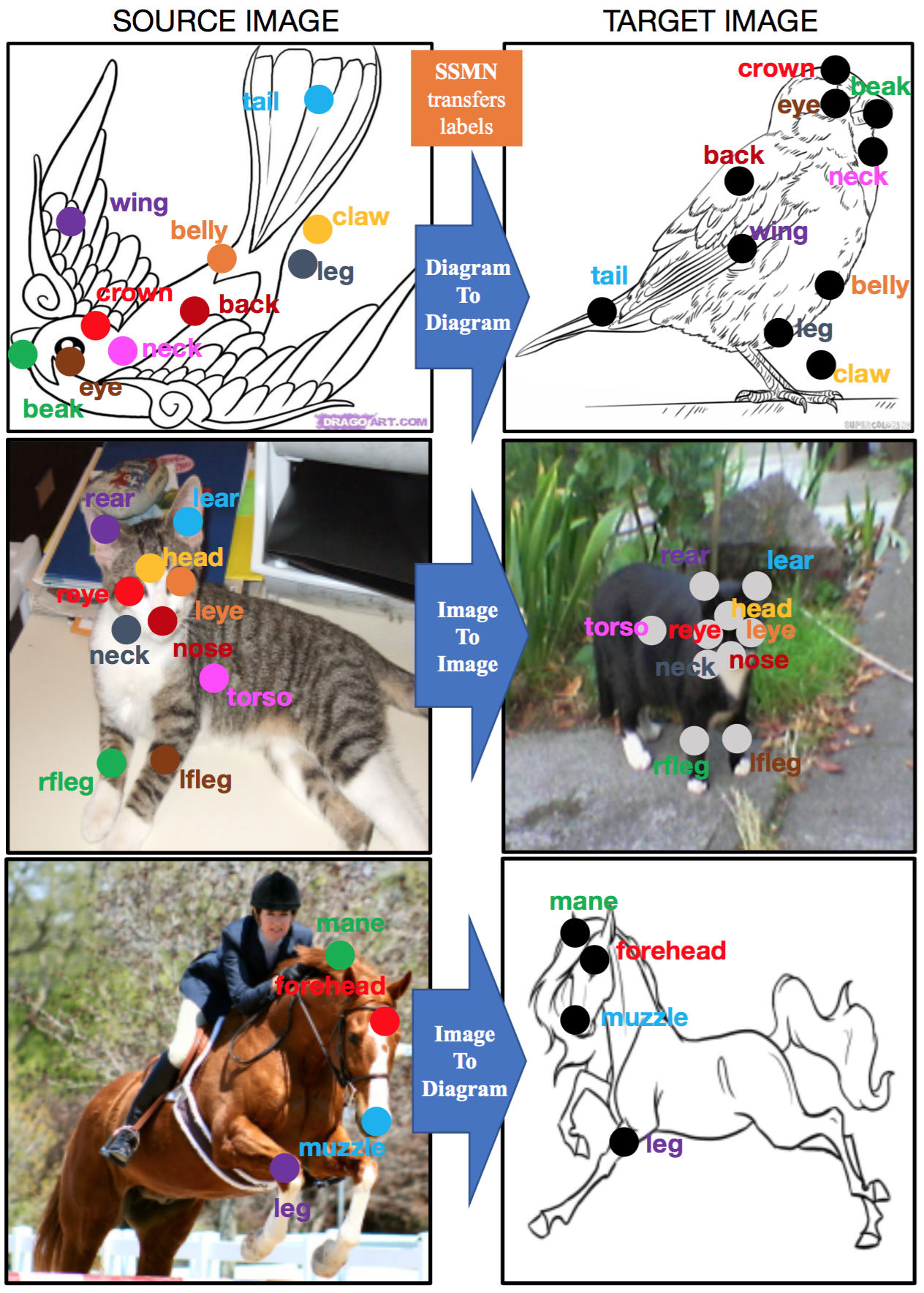

Diagrams often depict complex phenomena and serve as a good test bed for visual and textual reasoning. However, understanding diagrams using natural image understanding approaches requires large training datasets of diagrams, which are very hard to obtain. Instead, this can be addressed as a matching problem between labeled diagrams, between images, or between diagrams and images. This problem is very challenging: the absence of significant color and texture renders local cues ambiguous, so matching requires global reasoning. We consider the problem of one-shot part labeling: labeling multiple parts of an object in a target image given only a single source image of that category. For this set-to-set matching problem, we introduce the Structured Set Matching Network (SSMN), a structured prediction model that incorporates convolutional neural networks. The SSMN is trained using global normalization to maximize local match scores between corresponding elements and a global consistency score among all matched elements, while also enforcing a matching constraint between the two sets. The SSMN significantly outperforms several strong baselines on three label transfer scenarios: diagram-to-diagram, evaluated on a new diagram dataset of over 200 categories; image-to-image, evaluated on a dataset built on top of the Pascal Part Dataset; and image-to-diagram, evaluated on transferring labels across these datasets.
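The abstract combines three ingredients: local match scores between element pairs, a global consistency score over all matched elements, and a one-to-one matching constraint, trained with global normalization. The following is a minimal Python sketch of that scoring structure under stated assumptions, not the authors' SSMN implementation. It assumes both sets have the same number of parts, takes the local scores as a given matrix (in SSMN these come from convolutional networks), models global consistency as a hypothetical pairwise function `consistency(i, j, k, l)`, and enumerates every permutation, which is only tractable for a handful of parts. All function names (`matching_score`, `globally_normalized_nll`, `best_matching`) are illustrative.

```python
# Hypothetical sketch of globally normalized set-to-set matching;
# not the SSMN implementation from the paper.
import itertools
import math
from typing import Callable, Sequence, Tuple

# consistency(i, j, k, l): assumed pairwise score for jointly matching
# source part i -> target part j and source part k -> target part l.
Consistency = Callable[[int, int, int, int], float]


def matching_score(perm: Sequence[int],
                   local: Sequence[Sequence[float]],
                   consistency: Consistency) -> float:
    """Score one one-to-one matching: perm[i] is the target matched to source i."""
    n = len(perm)
    # Sum of local (per-pair) match scores.
    score = sum(local[i][perm[i]] for i in range(n))
    # Plus a global consistency term over every pair of matched elements.
    for i in range(n):
        for k in range(i + 1, n):
            score += consistency(i, perm[i], k, perm[k])
    return score


def all_matchings(n: int):
    """Every matching that satisfies the one-to-one constraint (n! permutations)."""
    return itertools.permutations(range(n))


def log_sum_exp(xs: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(x)))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def globally_normalized_nll(gold: Sequence[int],
                            local: Sequence[Sequence[float]],
                            consistency: Consistency) -> float:
    """-log p(gold), where p is normalized over all whole matchings."""
    scores = [matching_score(p, local, consistency)
              for p in all_matchings(len(local))]
    return log_sum_exp(scores) - matching_score(gold, local, consistency)


def best_matching(local: Sequence[Sequence[float]],
                  consistency: Consistency) -> Tuple[int, ...]:
    """Highest-scoring matching by exhaustive search (small n only)."""
    return max(all_matchings(len(local)),
               key=lambda p: matching_score(p, local, consistency))


if __name__ == "__main__":
    local = [[2.0, 0.1, 0.3],
             [0.2, 1.5, 0.4],
             [0.1, 0.3, 1.0]]
    no_consistency = lambda i, j, k, l: 0.0
    print(best_matching(local, no_consistency))  # (0, 1, 2)
    print(globally_normalized_nll((0, 1, 2), local, no_consistency))
```

Because the softmax in `globally_normalized_nll` runs over whole matchings rather than over each source element separately, the choices for different parts are coupled, and a strong enough consistency term can override the best local scores. Exhaustive enumeration grows as n!, so larger part sets would call for a different inference procedure.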

Paper

[PDF] [Supplementary Material]

Citation

@inproceedings{choiKKF18,
  author = {Jonghyun Choi and Jayant Krishnamurthy and Aniruddha Kembhavi and Ali Farhadi},
  title = {Structured Set Matching Networks for One-Shot Part Labeling},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2018},
}

Datasets

- DiPART dataset: Download (850.8 MB)

- Pascal Part Matching dataset: Download (262.4 MB)

- Cross-DiPART-PPM dataset: Download (127.0 MB)

Contact

Jonghyun Choi (jonghyunc@allenai.org)